Kind k8s cluster w/ containerlab w/o docker network

Why

Using kind to spin up k8s clusters quickly is great, but when it comes to networking, e.g. multiple interfaces on the nodes or connecting nodes to a complex network fabric, kind is limited. Containerlab, on the other hand, makes building a network fabric easy. Containerlab and kind do work together, but AFAICT the integration uses a secondary interface on the node, while the primary interface (= default route) must remain in place to provide API access and internet connectivity.

How to drop the docker network

In practice this means removing the eth0 interface from all k8s nodes while keeping the cluster healthy and connected.

1. Configure the cluster.yaml kind configuration to patch kubeadm and replace the node IPs/certs.

2. Configure topo.clab.yaml to build the needed fabric and create interfaces in the ext-containers of kind, as well as the needed host-level networking.

3. Start the clab in the background: sudo containerlab -t topo.clab.yaml deploy &

4. Start the kind cluster creation: kind create cluster -v 1 --config cluster.yaml

5. Once ready, remove eth0 and fix the DNS nameserver on all k8s/kind nodes.

6. Sed the .kube/config to replace the localhost:port with the desired API IP.

Important: steps 3 and 4 must run in parallel, because clab waits for the kind containers to be created, while the kind/kubeadm process waits for working networking before it can proceed.
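The parallel start can be wrapped so that a failure on either side is noticed. The following is only a sketch: run_both is a hypothetical helper name, not something provided by kind or containerlab, and the real invocation is left commented out because it needs both tools and docker on the host.

```shell
# Hypothetical helper: run two shell commands concurrently and
# return non-zero if either of them fails.
run_both() {
  sh -c "$1" &
  pid1=$!
  sh -c "$2" &
  pid2=$!
  rc=0
  wait "$pid1" || rc=1
  wait "$pid2" || rc=1
  return "$rc"
}

# Usage with the commands from this post (commented out on purpose):
# sudo -v   # cache sudo credentials so the background job does not prompt
# run_both "sudo containerlab -t topo.clab.yaml deploy" \
#          "kind create cluster -v 1 --config cluster.yaml"
```

Compared to a bare `&`, this waits for both processes, so the follow-up steps only start once clab and kind have both finished.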

Setup

Here is the cluster.yaml file; we pick the 10.10.10.0/24 subnet for the nodes:

---
apiVersion: kind.x-k8s.io/v1alpha4
kind: Cluster
name: k8s
networking:
  ipFamily: dual
  disableDefaultCNI: false
  podSubnet: "10.1.0.0/16,fd00:10:1::/63"
  serviceSubnet: "10.2.0.0/16,fd00:10:2::/108"
nodes:
  - role: control-plane
    kubeadmConfigPatches:
      - |
        kind: ClusterConfiguration
        controlPlaneEndpoint: "10.10.10.102:6443"
        apiServer:
          certSANs: [localhost, "127.0.0.1", "10.10.10.102"]
      - |
        kind: JoinConfiguration
        controlPlane:
          localAPIEndpoint:
            advertiseAddress: "10.10.10.102"
        nodeRegistration:
          kubeletExtraArgs:
            node-ip: "10.10.10.102,fd00:10:10:10::102"
      - |
        kind: InitConfiguration
        localAPIEndpoint:
          advertiseAddress: "10.10.10.102"
          bindPort: 6443
        nodeRegistration:
          kubeletExtraArgs:
            node-ip: "10.10.10.102,fd00:10:10:10::102"
  - role: worker
    labels:
      metallb-speaker: ""
    kubeadmConfigPatches:
      - |
        kind: ClusterConfiguration
        controlPlaneEndpoint: "10.10.10.102:6443"
        apiServer:
          certSANs: [localhost, "127.0.0.1", "10.10.10.102"]
      - |
        kind: JoinConfiguration
        discovery:
          bootstrapToken:
            apiServerEndpoint: 10.10.10.102:6443
        nodeRegistration:
          kubeletExtraArgs:
            node-ip: "10.10.10.103,fd00:10:10:10::103"
      - |
        kind: InitConfiguration
        nodeRegistration:
          kubeletExtraArgs:
            node-ip: "10.10.10.103,fd00:10:10:10::103"
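Each node-ip value pairs the node's IPv4 and IPv6 address, and both have to match what is later configured on net0 in the topology file. As a small sketch, assuming the addressing plan of this post (10.10.10.<n> and fd00:10:10:10::<n> with the same suffix), the value can be generated rather than typed by hand. node_ip is a hypothetical helper name.

```shell
# Hypothetical helper: build the dual-stack node-ip value for a node,
# assuming IPv4 10.10.10.<n> and IPv6 fd00:10:10:10::<n>.
node_ip() {
  printf '%s,%s\n' "10.10.10.$1" "fd00:10:10:10::$1"
}

node_ip 102   # control-plane
node_ip 103   # worker
```

Generating the string keeps the IPv4 and IPv6 halves in sync across the InitConfiguration and JoinConfiguration patches.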

The topo.clab.yaml should be tailored to the networking scenario under test.

It should make sure that:

- K8s/kind nodes are connected in a way that lets the CNI become healthy. E.g. when using kindnet, all nodes should be in the same L3 network.

- The networking configuration (routing/iptables) inside the containers' namespaces and in the host network provides internet access and local API access.

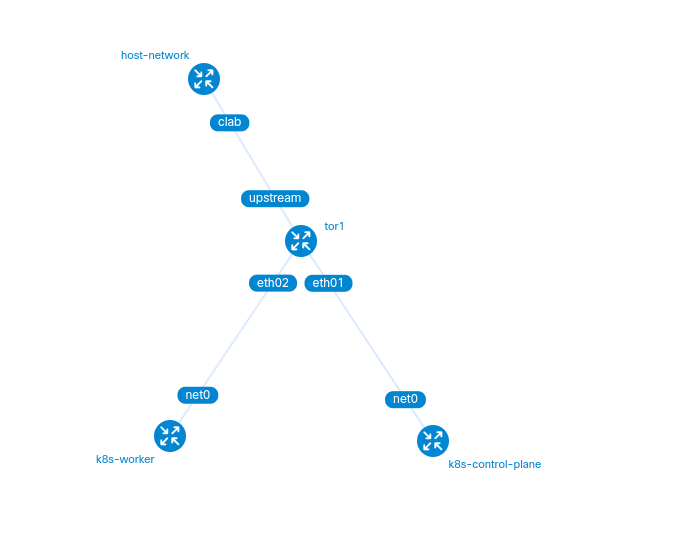

Example topology file

---
name: vlab
mgmt:
  network: mgmtnet
  ipv4-subnet: 172.100.100.0/24
  ipv6-subnet: ''
topology:
  nodes:
    host-network:
      kind: linux
      image: quay.io/karampok/snife:latest
      network-mode: host
      exec:
        - ip addr add 169.254.10.9/30 dev clab
        - ip route add 10.10.10.0/24 via 169.254.10.10
        - iptables -t nat -I POSTROUTING -o bond0 --source 10.10.10.0/24 -j MASQUERADE
        # bond0 = $(ip -json route get 8.8.8.8 | jq -r .[0].dev)
        # iptables -P FORWARD ACCEPT
        # check firewalls do not block traffic
    tor1:
      kind: linux
      image: quay.io/frrouting/frr:9.0.2
      network-mode: none
      binds:
        - conf/tor0/daemons:/etc/frr/daemons
        - conf/tor0/frr.conf:/etc/frr/frr.conf
        - conf/tor0/vtysh.conf:/etc/frr/vtysh.conf
      exec:
        - ip link set dev eth01 address 10:00:00:00:00:01
        - ip link set dev eth02 address 10:00:00:00:00:02
        - ip link set dev eth01 up
        - ip link set dev eth02 up
        - ip link add name br0 type bridge
        - ip link set dev br0 address 10:00:00:00:00:10
        - ip link set eth01 master br0
        - ip link set eth02 master br0
        - ip addr add 10.10.10.10/24 dev br0
        - ip addr add fd00:10:10:10::10/64 dev br0
        - ip link set dev br0 up
        - ip addr add 169.254.10.10/30 dev upstream
        - ip route add default via 169.254.10.9 src 10.10.10.10
        # more stuff like vlans/vrfs
        # - ip link add link eth01 name eth01.green type vlan id 11
        # - ip link add link eth01 name eth01.red type vlan id 12
        # - ip link add red type vrf table 120
        # - ip link set eth01.red master red
        # - ip addr add 10.10.10.20/24 dev eth02.blue
        # - ip addr add fd00:10:10:10::20/64 dev eth02.blue
        # - ip link add link eth02 name eth02.red type vlan id 12
        # - ip link add red type vrf table 120
        # - ip link set eth02.red master red
        # - ip link set dev red up
    k8s-control-plane:
      kind: ext-container
      network-mode: none
      exec:
        - ip link set dev net0 address de:ad:be:ff:11:01
        - ip addr add dev net0 10.10.10.102/24
        - ip addr add dev net0 fd00:10:10:10::102/64
        - ip link set dev net0 up
        - ip link add link net0 name green type vlan id 11
        - ip link set dev green up
        - ip link add link net0 name red type vlan id 12
        - ip link set dev red up
        - ip route delete default
        - ip route add default via 10.10.10.10
    k8s-worker:
      kind: ext-container
      network-mode: none
      exec:
        - ip link set dev net0 address de:ad:be:ff:11:02
        - ip addr add dev net0 10.10.10.103/24
        - ip addr add dev net0 fd00:10:10:10::103/64
        - ip link set dev net0 up
        - ip link add link net0 name green type vlan id 11
        - ip link set dev green up
        - ip link add link net0 name red type vlan id 12
        - ip link set dev red up
        - ip route delete default
        - ip route add default via 10.10.10.10
  links:
    - endpoints: ["tor1:eth01", "k8s-control-plane:net0"]
    - endpoints: ["tor1:eth02", "k8s-worker:net0"]
    - endpoints: ["tor1:upstream", "host-network:clab"]
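The MASQUERADE rule in the topology names bond0, which is simply the egress interface on the author's host; on another machine the name will differ. The comment in the topology derives it with ip -json and jq. As a sketch that avoids the jq dependency, the same name can be pulled from the plain-text output of ip route get. egress_dev is a hypothetical helper name, and the gateway address in the sample line is made up.

```shell
# Hypothetical helper: extract the interface name that follows "dev"
# in the output of `ip route get <addr>` read from stdin.
egress_dev() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Example with a captured line (gateway 192.168.149.1 is illustrative):
echo '8.8.8.8 via 192.168.149.1 dev bond0 src 192.168.149.212 uid 1000' | egress_dev

# On a real host:
# ip route get 8.8.8.8 | egress_dev
```

The resulting name is what belongs after -o in the iptables rule of the host-network node.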

The remaining steps are to remove eth0, fix DNS and restore local k8s API access.

# Detach every kind node from the docker network and set a public resolver
for node in $(kind get nodes --name k8s); do
  docker network disconnect kind "$node"
  docker exec "$node" /bin/bash -c "echo 'nameserver 8.8.8.8' > /etc/resolv.conf"
done
# Point kubectl at the API address on the fabric instead of the docker port-forward
sed -i 's|server: https://127.0.0.1:[0-9]*|server: https://10.10.10.102:6443|' ~/.kube/config
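Note that the sed expression rewrites every matching 127.0.0.1:<port> server entry in the kubeconfig, so entries of other local kind clusters in the same file would be changed as well. A cautious approach is to try the substitution on a scratch copy first. The sketch below wraps it in a hypothetical set_api_server function; the port 40123 in the demo is made up.

```shell
# Hypothetical helper: point the 127.0.0.1:<port> server entries of a
# kubeconfig at a different endpoint, going through a temp file.
set_api_server() {
  file=$1
  endpoint=$2
  tmp=$(mktemp) || return 1
  sed "s|server: https://127.0.0.1:[0-9]*|server: https://${endpoint}|" "$file" > "$tmp" \
    && cat "$tmp" > "$file"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Dry run on a scratch file instead of ~/.kube/config:
demo=$(mktemp)
printf '    server: https://127.0.0.1:40123\n' > "$demo"
set_api_server "$demo" 10.10.10.102:6443
cat "$demo"
rm -f "$demo"
```

Once the output looks right, the same call can be pointed at the real kubeconfig.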

Run

sudo containerlab -t topo.clab.yaml deploy &

kind create cluster -v 1 --config cluster.yaml

Creating cluster "k8s" ...

DEBUG: docker/images.go:58] Image: kindest/node:v1.29.2@sha256:51a1434a5397193442f0be2a297b488b6c919ce8a3931be0ce822606ea5ca245 present locally

✓ Ensuring node image (kindest/node:v1.29.2) 🖼

INFO[0000] Containerlab v0.57.3 started

INFO[0000] Parsing & checking topology file: topo.clab.yaml

INFO[0000] Creating docker network: Name="mgmtnet", IPv4Subnet="172.100.100.0/24", IPv6Subnet="", MTU=0

⠈⠁ Preparing nodes 📦 📦 INFO[0000] Creating lab directory: /home/kka/workspace/github.com/metallb/clab/dev-env/clab/clab-vlab

⠈⠱ Preparing nodes 📦 📦 INFO[0000] Creating container: "host-network"

INFO[0000] Creating container: "tor1"

⢄⡱ Preparing nodes 📦 📦 INFO[0000] failed to disable TX checksum offload for eth0 interface for host-network container

⢎⡰ Preparing nodes 📦 📦 INFO[0001] Created link: tor1:upstream <--> host-network:clab

INFO[0001] failed to disable TX checksum offload for eth0 interface for tor1 container

✓ Preparing nodes 📦 📦

INFO[0003] Created link: tor1:eth02 <--> k8s-worker:net0

INFO[0003] Created link: tor1:eth01 <--> k8s-control-plane:net0

✓ Writing configuration 📜

⠈⠁ Starting control-plane 🕹️ INFO[0003] Executed command "ip addr add 169.254.10.9/30 dev clab" on the node "host-network". stdout:

INFO[0003] Executed command "ip route add 10.10.10.0/24 via 169.254.10.10" on the node "host-network". stdout:

INFO[0003] Executed command "iptables -t nat -I POSTROUTING -o bond0 --source 10.10.10.0/24 -j MASQUERADE" on the node "host-network". stdout:

INFO[0003] Executed command "iptables -P FORWARD ACCEPT" on the node "host-network". stdout:

INFO[0003] Executed command "ip link set dev eth01 address 10:00:00:00:00:01" on the node "tor1". stdout:

INFO[0003] Executed command "ip link set dev eth02 address 10:00:00:00:00:02" on the node "tor1". stdout:

INFO[0003] Executed command "ip link set dev eth01 up" on the node "tor1". stdout:

INFO[0003] Executed command "ip link set dev eth02 up" on the node "tor1". stdout:

INFO[0003] Executed command "ip link add name br0 type bridge" on the node "tor1". stdout:

INFO[0003] Executed command "ip link set dev br0 address 10:00:00:00:00:10" on the node "tor1". stdout:

INFO[0003] Executed command "ip link set eth01 master br0" on the node "tor1". stdout:

INFO[0003] Executed command "ip link set eth02 master br0" on the node "tor1". stdout:

INFO[0003] Executed command "ip addr add 10.10.10.10/24 dev br0" on the node "tor1". stdout:

INFO[0003] Executed command "ip addr add fd00:10:10:10::10/64 dev br0" on the node "tor1". stdout:

INFO[0003] Executed command "ip link set dev br0 up" on the node "tor1". stdout:

INFO[0003] Executed command "ip addr add 169.254.10.10/30 dev upstream" on the node "tor1". stdout:

INFO[0003] Executed command "ip link add veth0 type veth peer name veth1" on the node "tor1". stdout:

INFO[0003] Executed command "ip link set veth0 master br0" on the node "tor1". stdout:

INFO[0003] Executed command "ip link set veth0 up" on the node "tor1". stdout:

INFO[0003] Executed command "ip link set veth1 up" on the node "tor1". stdout:

INFO[0003] Executed command "ip link set dev veth1 address 10:00:00:00:00:00" on the node "tor1". stdout:

INFO[0003] Executed command "ip route add default via 169.254.10.9 src 10.10.10.10" on the node "tor1". stdout:

INFO[0003] Executed command "ip link set dev net0 address de:ad:be:ff:11:02" on the node "k8s-worker". stdout:

INFO[0003] Executed command "ip addr add dev net0 10.10.10.103/24" on the node "k8s-worker". stdout:

INFO[0003] Executed command "ip addr add dev net0 fd00:10:10:10::103/64" on the node "k8s-worker". stdout:

INFO[0003] Executed command "ip link set dev net0 up" on the node "k8s-worker". stdout:

INFO[0003] Executed command "ip link add link net0 name green type vlan id 11" on the node "k8s-worker". stdout:

INFO[0003] Executed command "ip link set dev green up" on the node "k8s-worker". stdout:

INFO[0003] Executed command "ip link add link net0 name red type vlan id 12" on the node "k8s-worker". stdout:

INFO[0003] Executed command "ip link set dev red up" on the node "k8s-worker". stdout:

INFO[0003] Executed command "ip route delete default" on the node "k8s-worker". stdout:

INFO[0003] Executed command "ip route add default via 10.10.10.10" on the node "k8s-worker". stdout:

INFO[0003] Executed command "ip link set dev net0 address de:ad:be:ff:11:01" on the node "k8s-control-plane". stdout:

INFO[0003] Executed command "ip addr add dev net0 10.10.10.102/24" on the node "k8s-control-plane". stdout:

INFO[0003] Executed command "ip addr add dev net0 fd00:10:10:10::102/64" on the node "k8s-control-plane". stdout:

INFO[0003] Executed command "ip link set dev net0 up" on the node "k8s-control-plane". stdout:

INFO[0003] Executed command "ip link add link net0 name green type vlan id 11" on the node "k8s-control-plane". stdout:

INFO[0003] Executed command "ip link set dev green up" on the node "k8s-control-plane". stdout:

INFO[0003] Executed command "ip link add link net0 name red type vlan id 12" on the node "k8s-control-plane". stdout:

INFO[0003] Executed command "ip link set dev red up" on the node "k8s-control-plane". stdout:

INFO[0003] Executed command "ip route delete default" on the node "k8s-control-plane". stdout:

INFO[0003] Executed command "ip route add default via 10.10.10.10" on the node "k8s-control-plane". stdout:

INFO[0003] Adding containerlab host entries to /etc/hosts file

INFO[0003] Adding ssh config for containerlab nodes

+---+------------------------+--------------+----------------------------------------------------------------------------------------------+---------------+---------+----------------+----------------------+

| # | Name | Container ID | Image | Kind | State | IPv4 Address | IPv6 Address |

+---+------------------------+--------------+----------------------------------------------------------------------------------------------+---------------+---------+----------------+----------------------+

| 1 | k8s-control-plane | 6cf7edcb38e6 | kindest/node:v1.29.2@sha256:51a1434a5397193442f0be2a297b488b6c919ce8a3931be0ce822606ea5ca245 | ext-container | running | 172.20.20.3/24 | 2001:172:20:20::3/64 |

| 2 | k8s-worker | a2ecdf963f76 | kindest/node:v1.29.2@sha256:51a1434a5397193442f0be2a297b488b6c919ce8a3931be0ce822606ea5ca245 | ext-container | running | 172.20.20.2/24 | 2001:172:20:20::2/64 |

| 3 | clab-vlab-host-network | a6a72db33668 | quay.io/karampok/snife:latest | linux | running | N/A | N/A |

| 4 | clab-vlab-tor1 | 3ace245e590e | quay.io/frrouting/frr:9.0.2 | linux | running | N/A | N/A |

+---+------------------------+--------------+----------------------------------------------------------------------------------------------+---------------+---------+----------------+----------------------+

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-k8s"

You can now use your cluster with:

kubectl cluster-info --context kind-k8s

Not sure what to do next? 😅 Check out https://kind.sigs.k8s.io/docs/user/quick-start/

sed -i 's|server: https://127.0.0.1:[0-9]*|server: https://10.10.10.102:6443|' ~/.kube/config

[I] kka@f-t14s ~/w/g/m/c/d/clab (clab↑1 ✔)> k get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-control-plane Ready control-plane 22m v1.29.2 10.10.10.102 <none> Debian GNU/Linux 12 (bookworm) 6.10.10-200.fc40.x86_64 containerd://1.7.13

k8s-worker Ready <none> 21m v1.29.2 10.10.10.103 <none> Debian GNU/Linux 12 (bookworm) 6.10.10-200.fc40.x86_64 containerd://1.7.13

[I] kka@f-t14s ~/w/g/m/c/d/clab (clab↑1 ✔)> k get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-76f75df574-fqxlh 1/1 Running 0 22m

kube-system coredns-76f75df574-gnhp2 1/1 Running 0 22m

kube-system etcd-k8s-control-plane 1/1 Running 0 22m

kube-system kindnet-b7vr6 1/1 Running 1 (20m ago) 22m

kube-system kindnet-wnkmg 1/1 Running 0 21m

kube-system kube-apiserver-k8s-control-plane 1/1 Running 0 22m

kube-system kube-controller-manager-k8s-control-plane 1/1 Running 0 22m

kube-system kube-proxy-9bdvf 1/1 Running 0 21m

kube-system kube-proxy-srj5s 1/1 Running 0 22m

kube-system kube-scheduler-k8s-control-plane 1/1 Running 0 22m

local-path-storage local-path-provisioner-7577fdbbfb-f4mjw 1/1 Running 0 22m

Network explained

docker exec -it k8s-control-plane /bin/bash

root@k8s-control-plane:/# ip --br a s

lo UNKNOWN 127.0.0.1/8 ::1/128

...

net0@if835 UP 10.10.10.102/24 fd00:10:10:10::102/64 fe80::a8c1:abff:fe5e:c6a4/64

root@k8s-control-plane:/# ip route

default via 10.10.10.10 dev net0

...

10.10.10.0/24 dev net0 proto kernel scope link src 10.10.10.102
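The key property in this output is that the default route now points at the fabric gateway on net0 rather than at the docker bridge. A minimal sketch of an automated check follows; default_via_fabric is a hypothetical helper name, and the gateway 10.10.10.10 and interface net0 are the values used in this post.

```shell
# Hypothetical check: succeeds if the `ip route` output on stdin has its
# default route via the fabric gateway 10.10.10.10 on net0.
default_via_fabric() {
  grep '^default via 10\.10\.10\.10 dev net0' >/dev/null
}

# Example with the route table shown above:
printf '%s\n' \
  'default via 10.10.10.10 dev net0' \
  '10.10.10.0/24 dev net0 proto kernel scope link src 10.10.10.102' \
  | default_via_fabric && echo "default route uses the fabric"

# On a live node:
# docker exec k8s-control-plane ip route | default_via_fabric
```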

The tcpdump captures below (taken across all relevant network namespaces) show the packet path.

Node to Node

This traffic goes through the switching (bridge) part of the router.

net0 Out IP 10.10.10.102 > 10.10.10.103: ICMP echo request, id 15, seq 1, length 64

eth01 P IP 10.10.10.102 > 10.10.10.103: ICMP echo request, id 15, seq 1, length 64

eth02 Out IP 10.10.10.102 > 10.10.10.103: ICMP echo request, id 15, seq 1, length 64

net0 In IP 10.10.10.102 > 10.10.10.103: ICMP echo request, id 16, seq 1, length 64

net0 Out IP 10.10.10.103 > 10.10.10.102: ICMP echo reply, id 16, seq 1, length 64

eth02 P IP 10.10.10.103 > 10.10.10.102: ICMP echo reply, id 15, seq 1, length 64

eth01 Out IP 10.10.10.103 > 10.10.10.102: ICMP echo reply, id 15, seq 1, length 64

net0 In IP 10.10.10.103 > 10.10.10.102: ICMP echo reply, id 15, seq 1, length 64

Node to internet

The host-network node is responsible for forwarding and MASQUERADE-ing the traffic and for routing the replies back.

# K8s Node

net0 Out IP 10.10.10.102 > 8.8.8.8: ICMP echo request, id 13, seq 1, length 64

# Router from CLAB

eth01 P IP 10.10.10.102 > 8.8.8.8: ICMP echo request, id 13, seq 1, length 64

br0 In IP 10.10.10.102 > 8.8.8.8: ICMP echo request, id 13, seq 1, length 64

upstream Out IP 10.10.10.102 > 8.8.8.8: ICMP echo request, id 13, seq 1, length 64

# Host network

clab In IP 10.10.10.102 > 8.8.8.8: ICMP echo request, id 13, seq 1, length 64

bond0 Out IP 192.168.149.212 > 8.8.8.8: ICMP echo request, id 13, seq 1, length 64

bond0 In IP 8.8.8.8 > 192.168.149.212: ICMP echo reply, id 13, seq 1, length 64

clab Out IP 8.8.8.8 > 10.10.10.102: ICMP echo reply, id 13, seq 1, length 64

upstream In IP 8.8.8.8 > 10.10.10.102: ICMP echo reply, id 13, seq 1, length 64

br0 Out IP 8.8.8.8 > 10.10.10.102: ICMP echo reply, id 13, seq 1, length 64

eth01 Out IP 8.8.8.8 > 10.10.10.102: ICMP echo reply, id 13, seq 1, length 64

net0 In IP 8.8.8.8 > 10.10.10.102: ICMP echo reply, id 13, seq 1, length 64

Local k8s API access

This traffic can no longer use the port that docker exposes on localhost; instead, the network path goes through the interface that connects host-network and the clab router (tor1).

clab Out IP 169.254.10.9.52556 > 10.10.10.102.6443: Flags [S], seq 4290152719, win 56760, options [mss 9460,sackOK,TS val 2001072867 ecr 0,nop,wscale 7], length 0

upstream In IP 169.254.10.9.52556 > 10.10.10.102.6443: Flags [S], seq 4290152719, win 56760, options [mss 9460,sackOK,TS val 2001072867 ecr 0,nop,wscale 7], length 0

br0 Out IP 169.254.10.9.52556 > 10.10.10.102.6443: Flags [S], seq 4290152719, win 56760, options [mss 9460,sackOK,TS val 2001072867 ecr 0,nop,wscale 7], length 0

eth01 Out IP 169.254.10.9.52556 > 10.10.10.102.6443: Flags [S], seq 4290152719, win 56760, options [mss 9460,sackOK,TS val 2001072867 ecr 0,nop,wscale 7], length 0

net0 In IP 169.254.10.9.52556 > 10.10.10.102.6443: Flags [S], seq 4290152719, win 56760, options [mss 9460,sackOK,TS val 2001072867 ecr 0,nop,wscale 7], length 0