Chaining CNI Plugins

CNI (Container Network Interface) has supported chained plugins since version 0.3.0. This feature can potentially solve a variety of use cases while keeping the container network stack clean. This post explains how chained plugins work at a low level and how to extend the chain with a custom CNI plugin. Whether your container orchestrator supports plugin chaining depends on which Container Runtime, and which version of it, is in use.

Chained Plugins

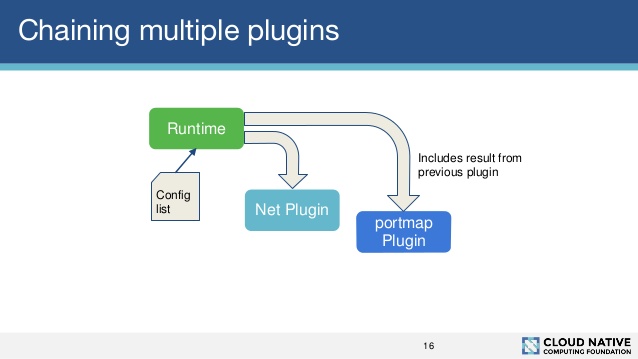

Chaining CNI plugins differs from making several independent calls to CNI plugins in that each plugin call depends on information created in the previous step. In most cases the information passed along is the container IP.

The figure, taken from a CNI presentation, illustrates plugin chaining.
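To make the dependency between the steps concrete, here is a minimal Python sketch of a chain in which the second step reads the container IP produced by the first. The functions `fake_ipam` and `fake_portmap` are hypothetical stand-ins, not real CNI plugins, and the result layout only loosely follows the CNI result format.

```python
# Toy model of a plugin chain; all function names are hypothetical stand-ins.

def fake_ipam(conf):
    """First step: allocate an address and report it in the result."""
    return {"ips": [{"address": "10.10.10.2/24"}]}

def fake_portmap(conf):
    """Second step: needs the container IP that the first step produced."""
    prev = conf["prevResult"]
    container_ip = prev["ips"][0]["address"].split("/")[0]
    print(f"would forward host port 9090 to {container_ip}:80")
    return prev  # pass the previous result through unchanged

def run_chain(plugins):
    """Call each step in order, handing it the result of the previous one."""
    result = None
    for plugin in plugins:
        conf = {} if result is None else {"prevResult": result}
        result = plugin(conf)
    return result

final = run_chain([fake_ipam, fake_portmap])
```

Swapping the order of the two steps would fail, because `fake_portmap` has no previous result to read the IP from. That ordering dependency is what distinguishes a chain from a set of independent calls.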

Container Runtime Behavior

A detailed description of how a Container Runtime should chain plugins can be found in the CNI spec.

To summarize the ADD part:

For the ADD action, the runtime MUST also add a prevResult field to the configuration JSON of any plugin after the first one, which MUST be the Result of the previous plugin (if any) in JSON format (see below). For the ADD action, plugins SHOULD echo the contents of the prevResult field to their stdout to allow subsequent plugins (and the runtime) to receive the result, unless they wish to modify or suppress a previous result. Plugins are allowed to modify or suppress all or part of a prevResult. However, plugins that support a version of the CNI specification that includes the prevResult field MUST handle prevResult by either passing it through, modifying it, or suppressing it explicitly. It is a violation of this specification to be unaware of the prevResult field.

The runtime MUST also execute each plugin in the list with the same environment. For the DEL action, the runtime MUST execute the plugins in reverse-order.
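Seen from the plugin side, the prevResult rule could look roughly like the Python sketch below. Real plugins are separate executables that read their configuration from stdin. Here `handle_add` is a hypothetical function that takes that JSON text and returns what the plugin would print to stdout, and the `extra_dns` option is an assumption, added only to show a deliberate modification.

```python
import json

def handle_add(stdin_text, extra_dns=None):
    """Return the stdout of a hypothetical chained plugin for the ADD action."""
    conf = json.loads(stdin_text)
    # A plugin after the first one receives prevResult from the runtime.
    result = conf.get("prevResult", {})
    if extra_dns is not None:
        # Modifying the previous result is allowed, as long as it is deliberate.
        dns = dict(result.get("dns", {}))
        dns["nameservers"] = dns.get("nameservers", []) + [extra_dns]
        result = {**result, "dns": dns}
    # Echo the (possibly modified) result so the next plugin can see it.
    return json.dumps(result)

stdin_text = json.dumps({
    "cniVersion": "0.3.1",
    "name": "mynet",
    "type": "noop",
    "prevResult": {"ips": [{"address": "10.10.10.2/24"}]},
})
passed_through = json.loads(handle_add(stdin_text))
modified = json.loads(handle_add(stdin_text, extra_dns="8.8.8.8"))
```

Without `extra_dns` the function is a pure pass-through, which is the default behavior the spec asks for.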

On the runtime side, such a description leads to the following bash script.

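    #!/bin/bash
    # Minimal runtime that chains CNI plugins.
    # Reads a network configuration list from stdin.

    netconf=$(cat)
    version=$(echo "$netconf" | jq -r '.cniVersion')
    name=$(echo "$netconf" | jq -r '.name')
    res="{}"

    # Base64-encode each plugin entry so that it survives word splitting.
    entries=$(echo "$netconf" | jq -cr '.plugins[] | @base64')
    if [ "$CNI_COMMAND" == "DEL" ]; then
      entries=$(echo "$entries" | tac)  # reverse for DEL (yes, there is such a unix command)
    fi

    for entry in $entries; do
      # Inject the list-level name and cniVersion into the plugin configuration.
      conf=$(echo "$entry" | base64 --decode | jq --arg n "$name" --arg v "$version" '. + {name:$n, cniVersion:$v}')
      # Log to stderr so that stdout only carries the final result.
      echo "Applying $(echo "$conf" | jq -r '.type')" >&2
      conf2="$conf"
      if [ "$res" != "{}" ]; then
        # Plugins after the first one receive the previous result as prevResult.
        conf2=$(echo "$conf" | jq --argjson p "$res" '. + {prevResult:$p}')
      fi
      plugin="$CNI_PATH"/$(echo "$conf2" | jq -r '.type')
      res=$(echo "$conf2" | "$plugin" | jq -c .)
      if [ "$res" == "" ]; then
        res="{}"
      fi
    done

    echo "$res" | jq .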

The above script is getting complicated and is still incomplete; for example, the CNI ARGs behavior is missing. For that reason, it is recommended to use the library provided by the containernetworking project. In our simple case, we use the cnitool binary, which is a minimal wrapper around the library calls.

Demo Run

In the context of CNI, a container is simply a network namespace.

    ip netns add cake

A JSON configuration is required so that the Container Runtime calls the CNI plugins in the right order and with the right inputs. Such a configuration looks like this:

    export NETCONFPATH=/opt/cni/netconfs
    export CNI_PATH=/opt/cni/bin/
    cat > $NETCONFPATH/30-chained.conflist <<EOF
    {
      "cniVersion": "0.3.1",
      "name": "mynet",
      "plugins": [
        {
          "type": "bridge",
          "isGateway": true,
          "ipMasq": true,
          "bridge": "br0",
          "ipam": {
            "type": "host-local",
            "subnet": "10.10.10.0/24",
            "routes": [
              { "dst": "0.0.0.0/0" }
            ],
            "dataDir": "/run/ipam-out-net"
          },
          "dns": {
            "nameservers": [ "8.8.8.8" ]
          }
        },
        {
          "type": "portmap",
          "capabilities": {"portMappings": true},
          "snat": false
        }
      ]
    }
    EOF

The interesting part is the "capabilities": {"portMappings": true} entry of the portmap plugin. It means that the Container Runtime is expected to provide the plugin with runtime arguments named portMappings. The format of the runtime arguments is a contract between the Container Runtime and the CNI plugin, without any restriction from the CNI specification. For the portmap plugin, for example, they should look like this:

    # runtime
    export CAP_ARGS='{
      "portMappings": [
        {
          "hostPort": 9090,
          "containerPort": 80,
          "protocol": "tcp"
        }
      ]
    }'

which matches what the code of the plugin expects.
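As a rough illustration of what happens between CAP_ARGS and the plugin, the Python sketch below mimics how the reference library hands runtime arguments over. Only the arguments whose capability the plugin declared as true are copied into its configuration, under a runtimeConfig key. The function name `inject_runtime_config` is hypothetical, and this is a simplified model of the behavior, not the library's code.

```python
import json

def inject_runtime_config(plugin_conf, cap_args):
    """Copy the runtime arguments that the plugin declared a capability for."""
    declared = plugin_conf.get("capabilities", {})
    runtime_config = {
        name: value
        for name, value in cap_args.items()
        if declared.get(name) is True
    }
    if not runtime_config:
        return dict(plugin_conf)  # nothing requested, configuration unchanged
    return {**plugin_conf, "runtimeConfig": runtime_config}

cap_args = json.loads(
    '{"portMappings": [{"hostPort": 9090, "containerPort": 80, "protocol": "tcp"}]}'
)
portmap = {"type": "portmap", "capabilities": {"portMappings": True}, "snat": False}
bridge = {"type": "bridge", "bridge": "br0"}

portmap_stdin = inject_runtime_config(portmap, cap_args)
bridge_stdin = inject_runtime_config(bridge, cap_args)
```

In this model the bridge plugin never sees the port mappings, because it did not ask for them. This keeps each plugin's input limited to what it declared.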

With the static network config and the runtime variables in place, we can execute the following:

    cnitool add mynet /var/run/netns/cake

Custom Plugin in the Chain

We will extend the chain with two plugins:

- One that adds a custom iptables masq rule in order to differentiate the traffic.
- One that is a noop plugin used for debugging.

The source code of the plugins can be found on GitHub:

    go install github.com/karampok/diktyo/plugins/noop
    go install github.com/karampok/diktyo/plugins/ipmasq

The configuration should now include the two plugins above, which ask for specific runtime arguments.

    cat > $NETCONFPATH/30-chained.conflist <<EOF
    {
      "cniVersion": "0.3.1",
      "name": "mynet",
      "plugins": [
        {
          "type": "bridge",
          "isGateway": true,
          "ipMasq": false,
          "bridge": "br0",
          "ipam": {
            "type": "host-local",
            "subnet": "10.10.10.0/24",
            "routes": [
              { "dst": "0.0.0.0/0" }
            ],
            "dataDir": "/run/ipam-out-net"
          },
          "dns": {
            "nameservers": [ "8.8.8.8" ]
          }
        },
        {
          "type": "ipmasq",
          "tag": "CNI-SNAT-X",
          "capabilities": {"masqEntries": true}
        },
        {
          "type": "portmap",
          "capabilities": {"portMappings": true},
          "snat": false
        },
        {
          "type": "noop",
          "debug": true,
          "capabilities": {
            "portMappings": true,
            "masqEntries": true
          },
          "debugDir": "/var/vcap/data/cni-configs/net-debug"
        }
      ]
    }
    EOF

The runtime arguments are provided, as before, through CAP_ARGS:

    export CAP_ARGS='{
      "portMappings": [
        {
          "hostPort": 9090,
          "containerPort": 80,
          "protocol": "tcp"
        }
      ],
      "masqEntries": [
        {
          "external": "10.0.2.15:5000-5010",
          "destination": "8.8.8.8/32",
          "protocol": "tcp",
          "description": "mark traffic to google dns server"
        }
      ]
    }'

Again we call cnitool:

    cnitool add mynet /var/run/netns/cake

As a result, the container gets a custom iptables rule that SNATs the traffic originating from the container to the right source port range.

    Chain POSTROUTING (policy ACCEPT 0 packets, 0 bytes)
     pkts bytes target      prot opt in   out   source       destination
        0     0 CNI-SNAT-X  all  --  *    *     0.0.0.0/0    0.0.0.0/0     /* ipmasq cni plugin */

    Chain CNI-SNAT-X (1 references)
     pkts bytes target      prot opt in   out   source       destination
        0     0 SNAT        tcp  --  *    *     10.10.10.3   8.8.8.8       /* cnitool-a992a834112856df626c:_mark_traffic_to_google_dns_server */ to:10.0.2.15:5000-5010
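The rule itself is created by the ipmasq plugin, whose source is not reproduced here. As a sketch of the idea only, the following Python function turns one masqEntries item into iptables arguments that would produce a rule of the shape shown above. The function name `snat_rule_args`, the argument order, and the omission of the comment match are all assumptions, not the plugin's actual implementation.

```python
def snat_rule_args(chain, container_ip, entry):
    """Build an iptables command line for one (hypothetical) masq entry."""
    return [
        "iptables", "-t", "nat", "-A", chain,
        "-s", container_ip,               # traffic originating from the container
        "-d", entry["destination"],       # only towards this destination
        "-p", entry["protocol"],
        "-j", "SNAT", "--to-source", entry["external"],  # host IP and port range
    ]

entry = {
    "external": "10.0.2.15:5000-5010",
    "destination": "8.8.8.8/32",
    "protocol": "tcp",
    "description": "mark traffic to google dns server",
}
args = snat_rule_args("CNI-SNAT-X", "10.10.10.3", entry)
```

The list form can be handed to `subprocess.run` without shell quoting concerns. The sketch only builds the arguments and does not touch the host's firewall.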

The noop plugin writes everything it receives on stdin, including the previous results, to a file for debugging or auditing purposes.

    cat /var/vcap/data/cni-configs/net-c/eth0/add_1519769962.json | jq .c
    {
      "capabilities": {
        "masqEntries": true,
        "metadata": true,
        "portMappings": true
      },
      "cniVersion": "0.3.1",
      "debug": true,
      "debugDir": "/var/vcap/data/cni-configs/net-debug",
      "name": "mynet",
      "prevResult": {
        "cniVersion": "0.3.1",
        "dns": {
          "nameservers": [
            "8.8.8.8"
          ]
        },
        "interfaces": [
          {
            "mac": "7e:d0:b5:97:9e:63",
            "name": "br0"
          },
          {
            "mac": "7e:d0:b5:97:9e:63",
            "name": "veth8fa1b01d"
          },
          {
            "name": "eth0",
            "sandbox": "/var/run/netns/cake"
          }
        ],
        "ips": [
          {
            "address": "10.10.10.2/24",
            "gateway": "10.10.10.1",
            "interface": 2,
            "version": "4"
          }
        ],
        "routes": [
          {
            "dst": "0.0.0.0/0"
          }
        ]
      },
      "runtimeConfig": {
        "masqEntries": [
          {
            "description": "allow production traffic",
            "destination": "8.8.8.8/32",
            "external": "10.0.2.15:5000-5010",
            "protocol": "tcp"
          }
        ],
        "portMappings": [
          {
            "containerPort": 80,
            "hostPort": 9090,
            "protocol": "tcp"
          }
        ]
      },
      "type": "noop"
    }
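Such a dump is easy to inspect programmatically. The Python sketch below pulls the container address, and the interface it belongs to, out of a trimmed copy of the prevResult shown above. In CNI results of this version, the `interface` field of an IP entry is an index into the `interfaces` list. The helper name `container_addresses` is hypothetical.

```python
import json

# Trimmed copy of the debug dump; only the fields used below are kept.
dump = json.loads("""
{
  "prevResult": {
    "interfaces": [
      {"name": "br0"},
      {"name": "veth8fa1b01d"},
      {"name": "eth0", "sandbox": "/var/run/netns/cake"}
    ],
    "ips": [
      {"address": "10.10.10.2/24", "gateway": "10.10.10.1",
       "interface": 2, "version": "4"}
    ]
  }
}
""")

def container_addresses(conf):
    """Return (interface name, address) pairs from a plugin's prevResult."""
    prev = conf["prevResult"]
    pairs = []
    for ip in prev["ips"]:
        iface = prev["interfaces"][ip["interface"]]  # index into the list
        pairs.append((iface["name"], ip["address"]))
    return pairs

addresses = container_addresses(dump)
```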

Conclusion

The tricky part with a custom plugin is that the Container Runtime has to supply the custom runtime arguments. Such behavior might not be supported out of the box.

CNI plugins in chaining mode seem to be a good fit for adding custom behavior to the network stack. It is important not to “abuse” chaining, but to use it only for network-related problems. CNI chaining combines different CNI plugins and assigns the right “responsibility” to each of them, which makes it possible to build complex and efficient network stacks.